Deep Sensorimotor Control by Imitating Predictive Models of Human Motion

Leveraging visual understanding of motion for policy learning

Every day, terabytes of video capture how humans interact with the world — opening doors, cooking meals, stacking boxes. This data gives away a lot of detail about what we want our robots to do. The guiding question in this project is: What is the most scalable way to utilize this data for robot learning?

The most common paradigm for learning from human data is real2sim, which seeks to replicate the environment in which humans operate by estimating its geometry and physical parameters. The resulting digital twin is necessary to map human motion to robot motion in the same scene as the video. In its full generality, this is a very hard inverse problem to solve. While working on HOP

This work presents an approach that circumvents these methods altogether. We seek to unify all human video data into one neural network and subsequently use it for policy learning. Specifically, we train a model to predict the future trajectory of human keypoints given the current scene and task, and then use it as a reward model. Such a reward model incentivizes a robot to track the motion of humans’ end effectors (hands!), given the observations and task. This learned reward, combined with a simple, sparse task-specific reward, is then used to train sensorimotor policies via massively parallelized reinforcement learning in simulation.

As the human dataset grows, we expect the reward model to capture human motion across a wide range of tasks and scenes. The knowledge encoded in this model enables large-scale policy training with reinforcement learning by eliminating the need for per-task manual reward design. Indeed, as demonstrated in our experiments, a single reward model suffices to train policies across diverse tasks and robot embodiments.

The reward model

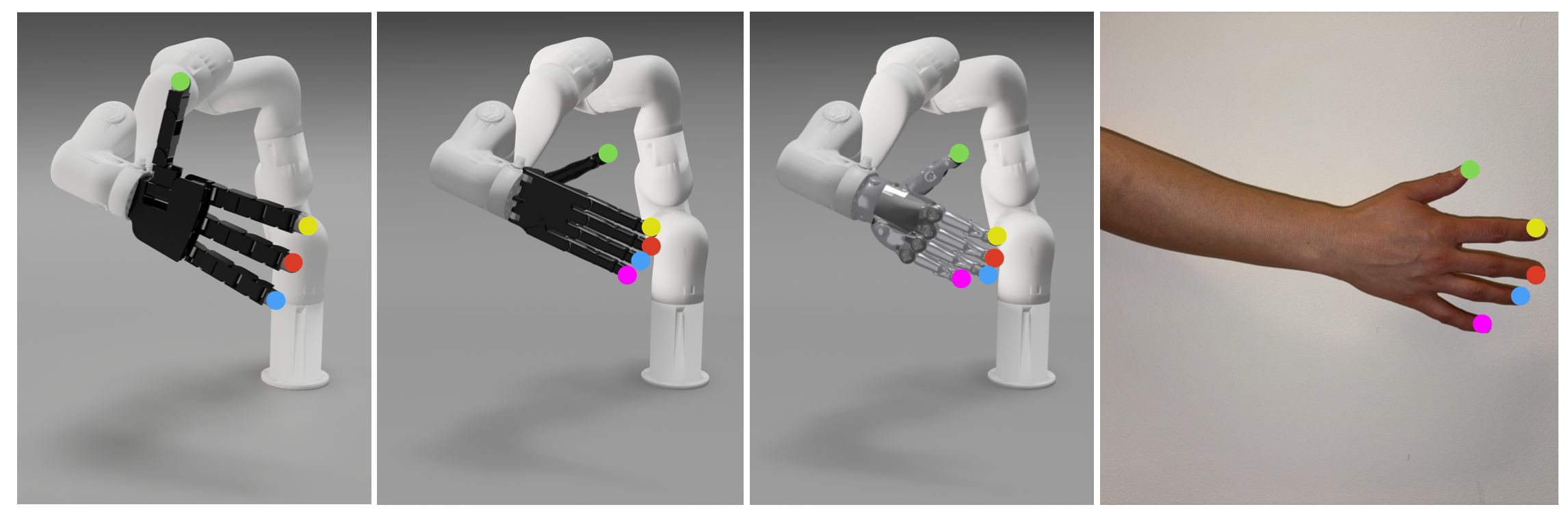

The goal of the reward model is to guide robot policies to behave similarly to humans in the robot’s environment. This reward model is trained on human data, but tested on robot data. For this to happen zero-shot, we need an abstraction level at which robot motion and human motion are as similar as possible. For anthropomorphic robots, there is a simple and intuitive choice: the trajectories of fingertips.

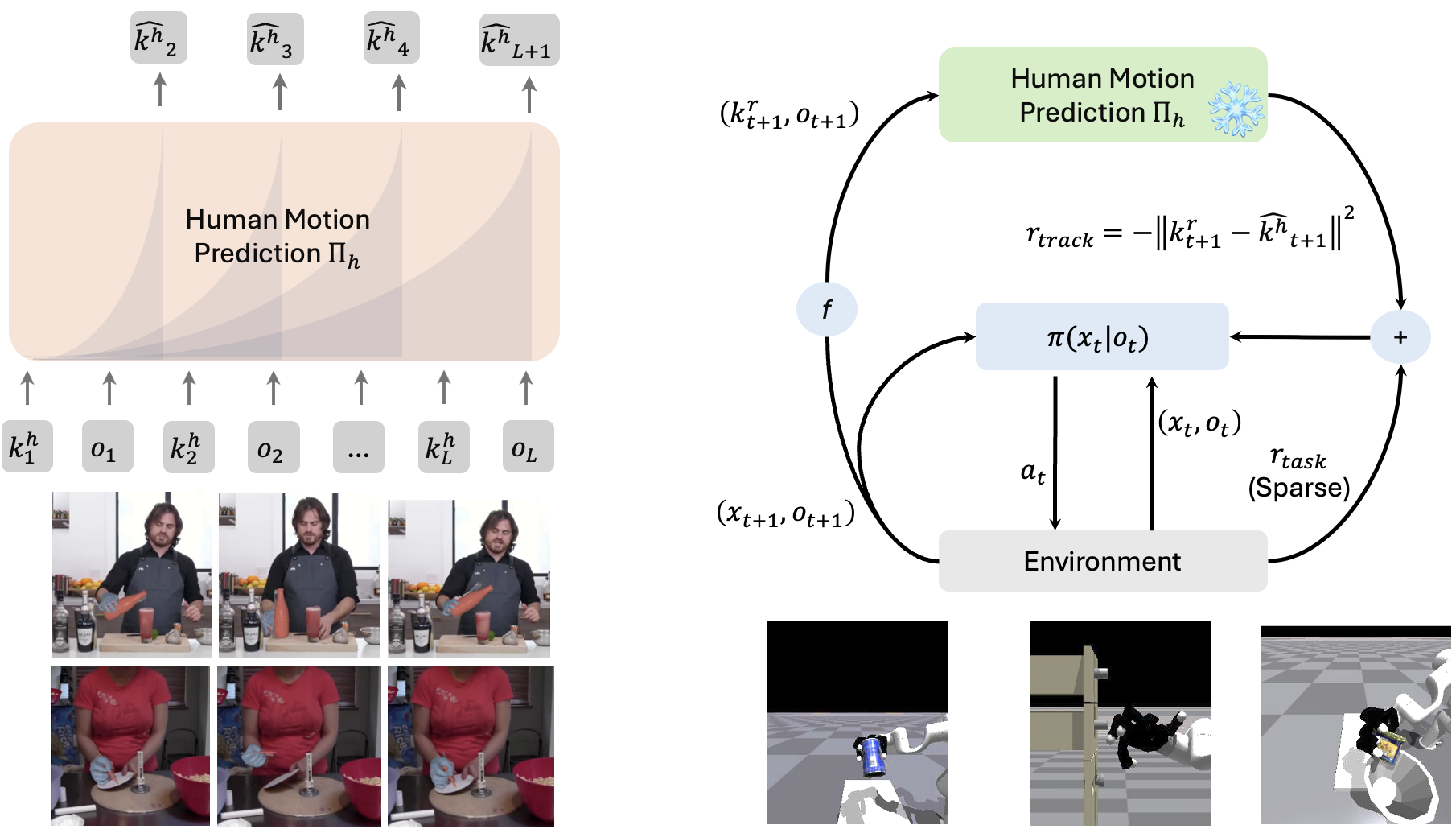

We set up our human motion model \(\Pi_{h}\) to predict the most likely future fingertip locations given a history of previous fingertip positions and scene observations (Fig. X). In our implementation, \(\Pi_{h}\) is a causal transformer trained on a dataset \(\mathcal{D} := \{g^{(i)}, (k^{h(i)}_1, o^{(i)}_1, k^{h(i)}_2, o^{(i)}_2, \dots)\}_{i=1}^n,\)

which consists of trajectories of fingertips \(k^{h}_t\) and object point cloud observations \(o_t\), as well as a goal label \(g\). This dataset contains instances of humans interacting with their surroundings to accomplish the task \(g\).
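To make the data layout concrete, here is a minimal sketch of how one trajectory from \(\mathcal{D}\) could be stored and turned into next-step prediction examples. The `Trajectory` container, the array shapes, the integer goal id, the `context` window, and the squared-error loss are all assumptions made for illustration; the text above does not specify them, and the actual model is a causal transformer rather than anything shown here.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Trajectory:
    """One element of the dataset D (hypothetical layout)."""
    goal: int                 # task label g, assumed to be an integer id
    fingertips: np.ndarray    # k^h_1..T, shape (T, F, 3): F fingertips in 3D
    point_clouds: np.ndarray  # o_1..T, shape (T, P, 3): P object points in 3D


def next_step_pairs(traj, context):
    """Build (history, target) pairs for next-fingertip prediction.

    The history at step t holds the goal plus at most `context` past
    fingertip positions and observations; the target is the fingertip
    position at step t, which the history never includes (causality).
    """
    num_steps = traj.fingertips.shape[0]
    pairs = []
    for t in range(1, num_steps):
        start = max(0, t - context)
        history = (traj.goal, traj.fingertips[start:t], traj.point_clouds[start:t])
        target = traj.fingertips[t]
        pairs.append((history, target))
    return pairs


def l2_loss(pred, target):
    """Mean squared Euclidean error per fingertip (an assumed training loss)."""
    return float(np.mean(np.sum((pred - target) ** 2, axis=-1)))
```

A trajectory with T time steps yields T - 1 training pairs, since the first step has no history to condition on.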

Training robot policies: \(\Pi_{h}\) as general reward

Massively parallelized reinforcement learning in simulation offers the potential to train powerful and general-purpose robot policies. Yet, current RL pipelines still require extensive manual intervention, particularly in two areas: (i) environment design and (ii) reward design. Both are challenging, and as a result, the most impressive successes to date have been limited to task-specific settings. Achieving greater generality in policy learning will require automating these components. While recent work has begun to address environment design by, e.g., using VLMs for scene generation, reward design remains largely manual (with only limited support provided by VLMs).

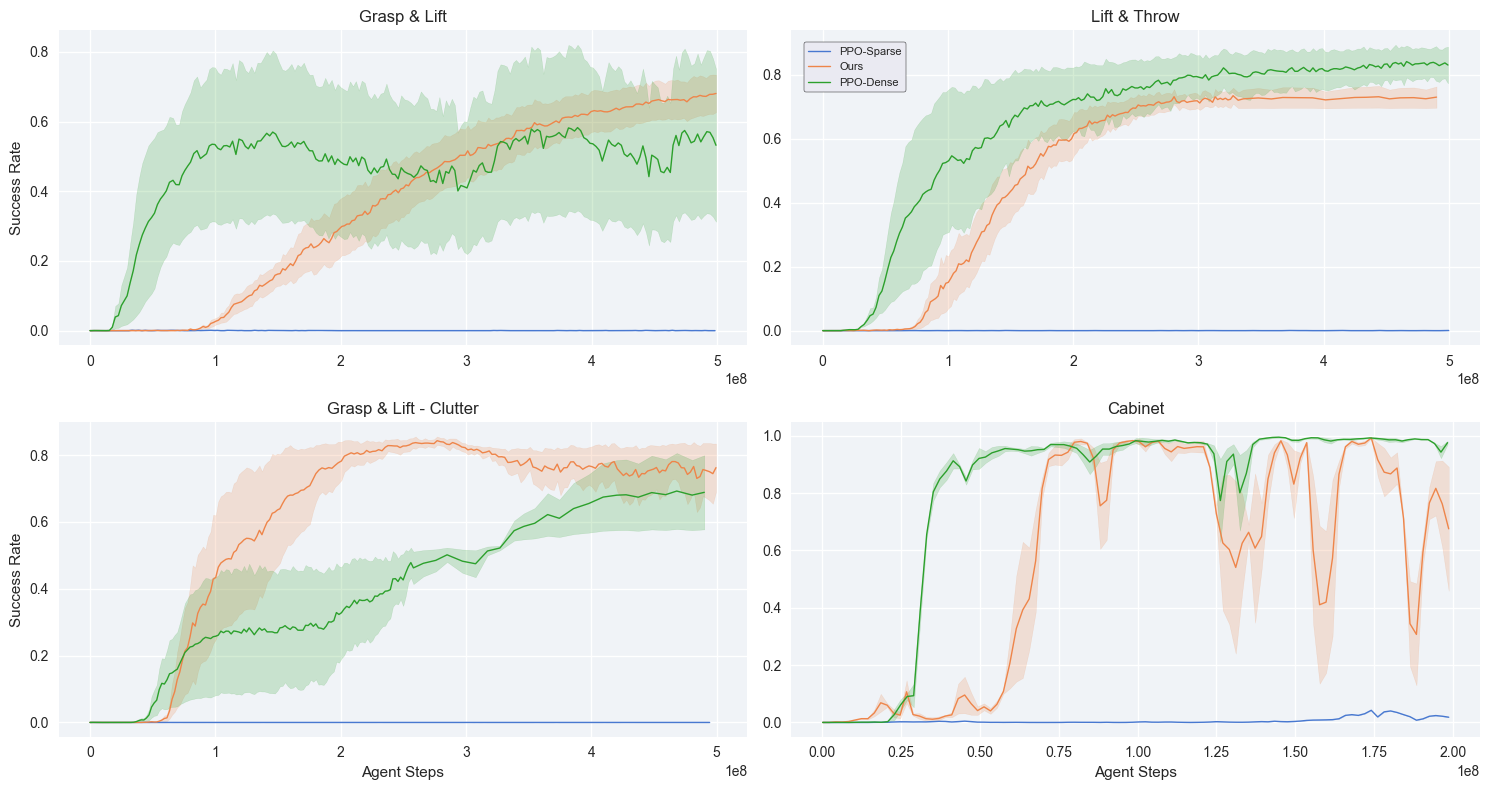

Tracking human motion is a fairly general way to specify behavior and, therefore, a viable solution to automate reward design. This is strictly more expressive than coding up a reward, in which case the reward function is limited to analytic expressions of the available state variables. To demonstrate the applicability of our approach, we use \(\Pi_{h}\) as a general reward for multiple tasks and robot embodiments. Empirically, we find that training policies with rewards from \(\Pi_{h}\) almost matches the performance of doing RL with hand-engineered rewards (PPO-Dense).

Note that we couple \(\Pi_{h}\) with a robot-independent sparse task reward, measuring, for example, whether the desired object has arrived at the target location. On its own, such a task reward is insufficient for policy training, as the performance of PPO-Sparse shows in the plot below.
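As a rough illustration of how a tracking term from the human motion model might be combined with a sparse task term, consider the sketch below. The exponential kernel, the `scale`, `tol`, `w_track`, and `w_task` parameters, and all function names are assumptions chosen for this example; the text does not state the exact functional form used in the experiments.

```python
import numpy as np


def tracking_reward(robot_fingertips, predicted_fingertips, scale=10.0):
    """Reward in (0, 1] that is higher when the robot's fingertips are
    close to the positions predicted by the human motion model.

    Both inputs have shape (N, F, 3): N parallel environments, F fingertips.
    The exponential kernel is an assumed choice.
    """
    # Mean Euclidean distance over fingertips, one value per environment.
    dist = np.linalg.norm(robot_fingertips - predicted_fingertips, axis=-1).mean(axis=-1)
    return np.exp(-scale * dist)


def sparse_task_reward(object_pos, target_pos, tol=0.05):
    """1.0 if the object is within `tol` of the target location, else 0.0."""
    reached = np.linalg.norm(object_pos - target_pos, axis=-1) < tol
    return reached.astype(np.float64)


def total_reward(robot_fingertips, predicted_fingertips, object_pos, target_pos,
                 w_track=1.0, w_task=1.0):
    """Weighted sum of the learned tracking term and the sparse task term."""
    return (w_track * tracking_reward(robot_fingertips, predicted_fingertips)
            + w_task * sparse_task_reward(object_pos, target_pos))
```

The task term depends only on the object and the target, which is what makes it independent of the robot embodiment; the tracking term supplies the dense signal that the sparse term lacks.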

What next

Our results are, at the moment, a proof of concept. One could reasonably ask: why bother training predictive models if we can design a dense reward? The real power of our approach comes with scaling up \(\Pi_h\) with internet-scale human data (e.g., videos or VR/AR applications), using RGB/RGB-D images as observations and language as goal description. This will unlock a truly general reward for arbitrary tasks and scenes.

From the modeling perspective, learning to predict changes in the environment and not just the human motion will make the reward more expressive, possibly removing the need for any other sparse reward for RL. However, that amounts to training a world model in RGB space, which is beyond our means at the moment. As open-source video models improve in quality, finding a way to cheaply repurpose them as a general reward model is a very promising direction for future work.

Acknowledgements

Viser, by Brent Yi, has been very helpful in designing custom remote visualization tools for analysis and debugging throughout this project. We would like to thank Arthur Allshire, Ankur Handa and Jathushan Rajasegaran for helpful discussions during the course of the project.